I am a CS PhD student at Mila and Concordia University, advised by Prof. Eugene Belilovsky. My research is supported by the FRQNT and Frederick Lowy Scholars fellowships.

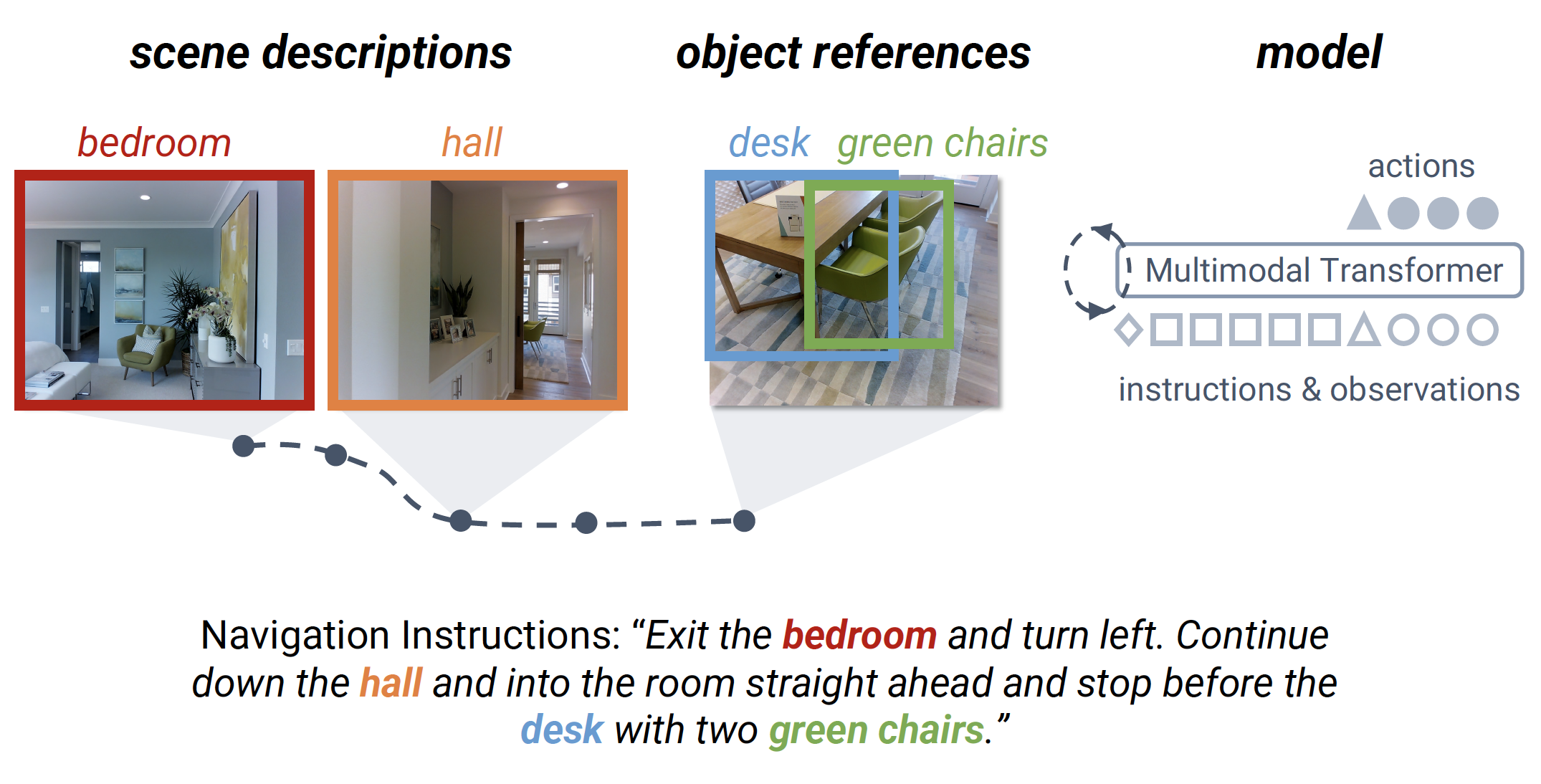

Before starting my PhD, I was a Visiting Scholar with Prof. Devi Parikh and Prof. Dhruv Batra at Georgia Tech, where I worked on building multi-modal embodied agents that navigate photo-realistic environments using visual and language cues.

I completed my Bachelor's and Master's in Electronics and Communication Engineering (ECE) in 2019 at IIIT Hyderabad, where I pursued research in long-term visual object tracking with Prof. Vineet Gandhi. My Master's thesis is available here.

During my Master's, I also spent two wonderful semesters at UC San Diego and Stanford University in 2018. I worked with Prof. Sicun Gao at UC San Diego on sample-efficient reinforcement learning algorithms for Atari games. At Stanford, I collaborated with Prof. Noah Goodman on recognizing humor in text.